Kernel method —its basics

The basics of a kernel method is explained and a kernel function is naturally introduced.

Intro

In a machine learning context, kernel method is a convenient method to analyze datasets, and it’s worthwhile to understand the math behind the method. The purpose of this article is to explain the basics of a kernel method.

Here we have a definition of a kernel function.

Suppose set X is an input space, the set of all possible input values. Then, the kernel function for a kernel method is a map from 2 input spaces to the set of real numbers. And a kernel function must be a positive semi-definite.

There are many types of kernel functions.

Linear kernel

When input vector x contains many zeros (like text data), a linear kernel can be used.

Polynomial kernel

It is used for classifying image data.

Gaussian kernel

This is a general-purpose kernel function used when there is no prior knowledge about the data.

Now we want to see how a kernel function is naturally introduced in the context of a kernel method.

Problem Setup

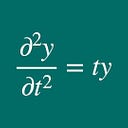

Suppose we have N data points

where x is a vector and t is a scalar. Now we want to apply a simple non-linear regression to the data points:

where w is the set of model parameters for the regression analysis, and phi(x) is a vector function of x. Then we define the loss function by:

where lambda is the parameter for regularization.

Kernel Method

Now we want to minimize the loss function. To do this, we first differentiate the loss function with regard to the model parameters w and equate it to zero. This allows us to have:

This means that we have the following expression for the model parameters :

Conveniently, we introduce a matrix Phi such that

to get a simple expression for the model parameters:

With the matrix, we can see that

and so we get the following expression for the loss function:

Then we introduce an N-by-N matrix K which is defined by

and we call it a gram matrix. The elements of the gram matrix are:

where k(x_i, x_j) is called the kernel function for this Kernel method. Also, we can express the loss function as

with the gram matrix.

Moreover, taking advantage of the fact that

we obtain

where I_N is the N-by-N identity matrix. Therefore the model parameters are expressed by the gram matrix, matrix Phi, parameter lambda, and the data point t.

Thus, we obtain the formula for the non-linear regression

where k is a vector vector function for an unknown point x:

that is

Example

I just put a graph as an example of a kernel method, where a Gaussian kernel is used, changing the parameter lambda. We can see that as the lambda decreases the inferred line tends to be fitted more to the data points.