Relation between Gaussian Process Regression and Kernel Machines (short read)

A simple explanation of how Gaussian process regression is connected to kernel machines

Motivation

Gaussian process regression is widely used in machine learning. Still, it is not always obvious how Gaussian process regression relates to kernel machines. So, in this short read, I explain how Gaussian process regression is connected to kernel methods.

Definition

First we look at the definition of a Gaussian process.

A process {y_i} is called a Gaussian process if, for every positive integer N and every collection of distinct points

x_1, x_2, … , x_N

the N-dimensional vector y=(y_1, y_2, … , y_N ), where y_i is the value of the process at x_i, has a multivariate normal distribution.
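To make the definition concrete, here is a minimal sketch of what "has a multivariate normal distribution" means at a finite set of points. The squared-exponential kernel, the zero mean, and the helper name `rbf_kernel` are assumptions chosen for illustration; the definition itself does not fix any of them.

```python
import numpy as np

def rbf_kernel(xa, xb, length_scale=1.0):
    """Squared-exponential kernel, used here only as an example covariance."""
    d = xa[:, None] - xb[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)

rng = np.random.default_rng(0)
x = np.linspace(-3.0, 3.0, 5)        # N = 5 distinct points
K = rbf_kernel(x, x)                 # N x N covariance matrix
mean = np.zeros(len(x))              # zero mean (an assumption)

# By the definition, (y_1, ..., y_N) is a single draw from N(mean, K).
y = rng.multivariate_normal(mean, K)

# Any sub-collection of the points is again jointly Gaussian, with the
# corresponding sub-block of K as its covariance.
idx = [0, 2, 4]
K_sub = K[np.ix_(idx, idx)]
```

The sub-block `K_sub` is exactly the kernel matrix you would build directly from the three selected points, which is why the definition can demand normality for every N and every collection of points at once.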

A Sketch

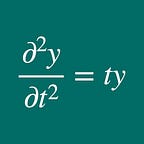

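The definition of a Gaussian process shown above leads us to the answer. Now let us consider the following simple model:

$$y_i = \mathbf{w}^\top \boldsymbol{\phi}(\mathbf{x}_i)$$

that is, y_i is a linear combination of the basis functions evaluated at the vector x_i, where w is a weight vector. Then we consider the prior distribution of the weight vector. A simple choice is a zero-mean multivariate normal distribution, for example with an isotropic covariance:

$$p(\mathbf{w}) = \mathcal{N}(\mathbf{w} \mid \mathbf{0}, \alpha^{-1}\mathbf{I})$$

where α > 0 is a precision parameter. Now we treat {y_1, y_2, … , y_N} as a Gaussian process. By definition, the following N-dimensional vector

$$\mathbf{y} = (y_1, y_2, \dots, y_N)^\top = \boldsymbol{\Phi}\mathbf{w}$$

has a multivariate normal distribution, where Φ is the matrix whose i-th row is φ(x_i)^T. Since y is a linear function of the Gaussian vector w, under the prior above this distribution is

$$p(\mathbf{y}) = \mathcal{N}(\mathbf{y} \mid \mathbf{0}, \mathbf{K})$$

where the covariance matrix is

$$\mathbf{K} = \alpha^{-1}\boldsymbol{\Phi}\boldsymbol{\Phi}^\top, \qquad K_{ij} = \alpha^{-1}\boldsymbol{\phi}(\mathbf{x}_i)^\top \boldsymbol{\phi}(\mathbf{x}_j) = k(\mathbf{x}_i, \mathbf{x}_j)$$

This is a familiar form: the components of the covariance matrix are values of a kernel function k.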
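The claim can be checked numerically with a short sketch. Everything specific here is an assumption for illustration: the model is taken to be y_i = w^T phi(x_i) with a zero-mean isotropic prior w ~ N(0, I / alpha), and the basis functions `phi` are hypothetical polynomial features. The script compares the kernel matrix implied by the prior with the empirical covariance of y over many sampled weight vectors.

```python
import numpy as np

rng = np.random.default_rng(0)

def phi(x):
    """Hypothetical basis functions: polynomial features 1, x, x^2."""
    x = np.asarray(x, dtype=float)
    return np.stack([np.ones_like(x), x, x**2], axis=-1)

alpha = 2.0                            # assumed prior precision: w ~ N(0, I / alpha)
x = np.array([-1.0, 0.0, 0.5, 2.0])    # N = 4 input points
Phi = phi(x)                           # design matrix, shape (N, M)

# Kernel (Gram) matrix implied by the prior:
# K_ij = phi(x_i)^T phi(x_j) / alpha
K = Phi @ Phi.T / alpha

# Monte Carlo check: sample weights, push them through the model,
# and estimate Cov[y] from the draws.
W = rng.normal(scale=np.sqrt(1.0 / alpha), size=(200_000, Phi.shape[1]))
Y = W @ Phi.T                          # each row is one draw of y = Phi w
K_mc = Y.T @ Y / len(Y)                # empirical covariance (the mean is zero)

max_abs_err = np.max(np.abs(K_mc - K))
print(max_abs_err)                     # small compared with the entries of K
```

The point of the comparison is that `K` never touches the weights: once the prior is fixed, the covariance of the outputs depends on the inputs only through inner products of basis functions, which is what a kernel function computes.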

So Gaussian process regression is directly related to kernel methods: the covariance function of the Gaussian process plays the role of the kernel.